How I Sped Up My Python CLI By 25%

I recently noticed that the Yahoo Finance stock summary command line interface (CLI) I made seemed to be slowing down. Seeking to understand what was happening in my code, I remembered Python has multiple profilers available like Scalene, line_profiler, cProfile and pyinstrument. In this case, I was running my code on Python version 3.11.

First, I tried cProfile from the Python standard library. It is nice to have without any install required! However, I found its output to be tough to interpret. I also remembered I liked a talk I saw about Scalene, which gave a thorough overview of several Python profilers and how they're different. So next, I tried Scalene. Finally, I found pyinstrument and can safely say it is now my favorite Python profiler. This post will focus on how I used pyinstrument to make my command line tool faster.
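For reference, here is a minimal sketch of the cProfile workflow using only the standard library. The `slow_sum` function and the `profile_call` helper are hypothetical stand-ins for illustration, not part of my CLI. Sorting by cumulative time and capping the number of rows makes the report a little easier to scan.

```python
import cProfile
import io
import pstats


def slow_sum(n):
    # Hypothetical stand-in for real work.
    return sum(i * i for i in range(n))


def profile_call(func, *args, top=10):
    """Run func under cProfile; return (result, text report)."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time and print only the top rows.
    stats.sort_stats(pstats.SortKey.CUMULATIVE).print_stats(top)
    return result, buffer.getvalue()


if __name__ == "__main__":
    result, report = profile_call(slow_sum, 100_000)
    print(report)
```

To profile a whole script instead, cProfile can be run as a module, for example `python -m cProfile -s cumulative finsou.py -s GOOG`.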

Install pyinstrument with pip

pip install pyinstrument

I preferred pyinstrument's format, which presents modules, functions and the time they consumed in a tree structure. Scalene's percentage-based diagnosis was also useful. Scalene showed the specific lines where code was bottlenecked, whereas pyinstrument showed the time spent in each module and function. With pyinstrument, I liked that I could see the time spent in specific functions from the external modules I was using. For example, the Beautiful Soup and rich modules both consumed shockingly little time. However, the pandas module took a whole second.

Just importing the pandas module and doing nothing else was taking up to and sometimes over a second each time my CLI ran. On a script that takes about four seconds to execute, one second is 25% of the total run time! Once I realized this, I decided to only import the pandas module if my CLI's --csv argument was given. I was only using pandas to sort stocks and write a CSV. It wasn't critical functionality for my CLI.
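One way to check an import's cost in isolation is to time it directly. This is a minimal sketch, not what pyinstrument does internally, and `time_import` is a hypothetical helper name. The number is only meaningful the first time a module is imported in a process, since later imports are served from `sys.modules`.

```python
import importlib
import sys
import time


def time_import(module_name):
    """Import module_name and return (elapsed seconds, was already cached)."""
    already_loaded = module_name in sys.modules
    start = time.perf_counter()
    importlib.import_module(module_name)
    elapsed = time.perf_counter() - start
    return elapsed, already_loaded


if __name__ == "__main__":
    # Swap in "pandas" (if installed) to reproduce the kind of
    # measurement described in this post.
    for name in ("csv", "json"):
        elapsed, cached = time_import(name)
        note = " (already cached, not a cold import)" if cached else ""
        print(f"import {name}: {elapsed:.3f}s{note}")
```

CPython also has a built-in option for this: running `python -X importtime finsou.py -s GOOG` prints a per-module import timing breakdown to stderr.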

My CLI script accepts a stock ticker as an argument. The command below fetches a stock report from Yahoo Finance and prints it to the terminal. Swapping out "python" for "pyinstrument" runs the script and prints a pyinstrument report to your console.

Fetch a stock report from Yahoo.

pyinstrument finsou.py -s GOOG

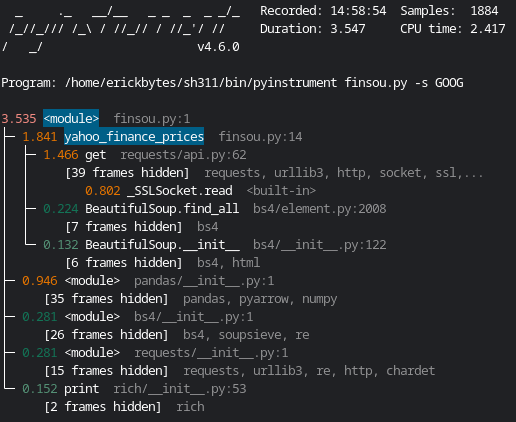

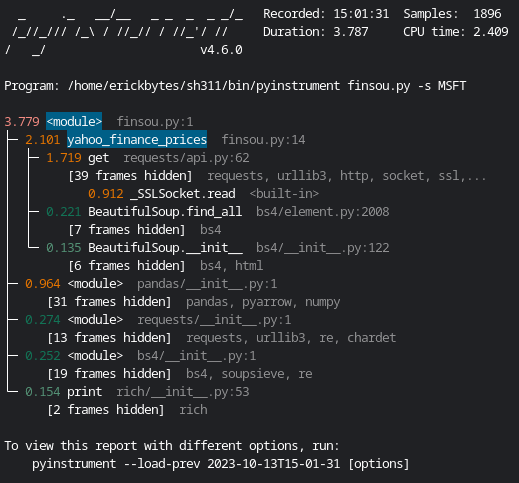

pyinstrument Results With Normal Pandas Import

GOOG, Google

MSFT, Microsoft

The line for the pandas module looks like this:

0.946 <module> pandas/__init__.py:1

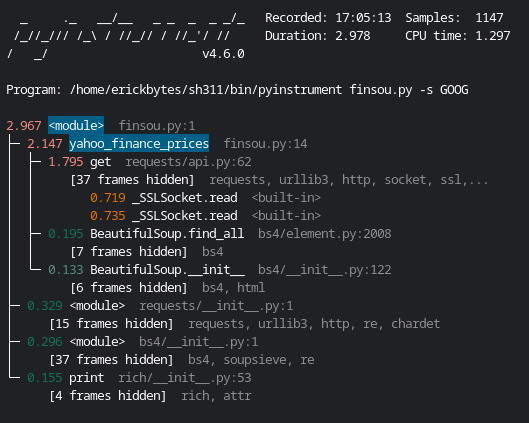

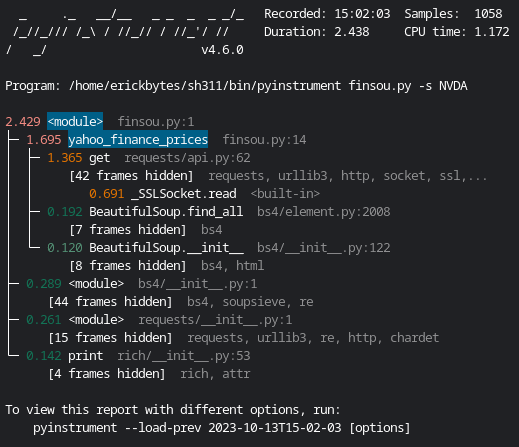

pyinstrument Results With Pandas Import Only If Necessary

After changing the pandas module to only import if needed, it is no longer eating almost a second of time. As a result, the script runs about a second faster each time! Below are the pyinstrument reports for two different stocks after changing my pandas import to happen only when it is actually used:

GOOG, Google

NVDA, Nvidia

Sidebar: HTTP Request Volatility

The script's run time fluctuates by anywhere from half a second to a few seconds, depending on the HTTP GET request made with the requests module. It lags even more if my internet connection is weak or Yahoo throttles my requests because I've made too many in a short period of time. My time savings didn't come from tinkering with the HTTP request, even though it was a time-eater that sometimes caused an extra delay.
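Because of that network noise, a single run can be misleading when judging a change like the import fix. Here is a rough sketch of one way to compare, assuming you can wrap the work in a callable: time it several times and look at the median. `time_runs` and `fake_fetch` are hypothetical names for illustration; `fake_fetch` sleeps for a random interval to imitate a jittery request and makes no network call.

```python
import random
import statistics
import time


def time_runs(func, repeats=5):
    """Call func repeatedly and return a list of elapsed seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        timings.append(time.perf_counter() - start)
    return timings


def fake_fetch():
    # Imitates a request whose latency varies from call to call.
    time.sleep(random.uniform(0.01, 0.05))


if __name__ == "__main__":
    timings = time_runs(fake_fetch)
    print(f"min    {min(timings):.3f}s")
    print(f"median {statistics.median(timings):.3f}s")
    print(f"max    {max(timings):.3f}s")
```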

Simplified Python Example to Achieve Speed Gains

Below is the method I used to achieve a faster CLI. Heads up: this is a simplified sketch with the Yahoo request stubbed out, so it won't fetch real data. It's only meant to explain how I made my code faster. You can find the actual script where I made this improvement here on GitHub.

```python
import argparse

from bs4 import BeautifulSoup  # used by the real script to parse the page
from rich import print as rprint

# Original import --> lazy import only if csv argument given: import pandas as pd


def yahoo_finance_prices(url, stock):
    # Stub standing in for the real HTTP request and HTML parsing.
    return "Stonk went up.", "1000%"


parser = argparse.ArgumentParser(
    prog="finsou.py",
    description="Beautiful Financial Soup",
    epilog="fin soup... yum yum yum yum",
)
parser.add_argument(
    "-s", "--stocks", required=True, help="comma sep. stocks or portfolio.txt"
)
parser.add_argument("-c", "--csv", help='set csv export with "your_csv.csv"')
args = parser.parse_args()
prices = list()
# Split the comma-separated tickers; iterating the raw string would
# loop over single characters.
for stock in args.stocks.split(","):
    # Placeholder URL for this sketch; the real script builds its own.
    url = f"https://finance.yahoo.com/quote/{stock}"
    summary, ah_pct_change = yahoo_finance_prices(url, stock)
    rprint(f"[steel_blue]{summary}[/steel_blue]\n")
    prices.append([stock, summary, url, ah_pct_change])
if args.csv:
    # Importing here shaves 1 second off the CLI when CSV is not required.
    import pandas as pd

    cols = ["Stock", "Price_Summary", "URL", "AH_%_Change"]
    stock_prices = pd.DataFrame(prices, columns=cols)
    stock_prices.to_csv(args.csv, index=False)
```

Make It Fast

"Make it work, make it right, make it fast." - Kent Beck

That's how I sped up my Python CLI by 25%. This method bucks the convention of keeping your import statements at the top of your script. In my case, it's a hobby project, so I'm OK with trading less readable code for a snappier CLI experience. You could also consider using the standard library csv module instead of pandas.
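If moving the import into the `if` block feels too unconventional, the standard library documents a recipe based on `importlib.util.LazyLoader` that keeps the import near the top of the file but defers running the module until it is first used. I did not use this in my script and have not measured it with pandas, so treat it as a sketch of an alternative. The small `colorsys` module is only a stand-in here.

```python
import importlib.util
import sys


def lazy_import(name):
    """Return a module object whose code runs on first attribute access."""
    spec = importlib.util.find_spec(name)
    if spec is None or spec.loader is None:
        raise ImportError(f"cannot find module {name!r}")
    loader = importlib.util.LazyLoader(spec.loader)
    spec.loader = loader
    module = importlib.util.module_from_spec(spec)
    sys.modules[name] = module
    # Sets up the lazy module; the module's own code does not run yet.
    loader.exec_module(module)
    return module


# colorsys is a small stdlib module used as a stand-in for pandas.
colorsys = lazy_import("colorsys")

if __name__ == "__main__":
    # The module body actually executes here, on first attribute access.
    print(colorsys.rgb_to_hsv(1.0, 0.0, 0.0))
```

The trade-off is that any import error surfaces later, at first use, rather than at the top of the script.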

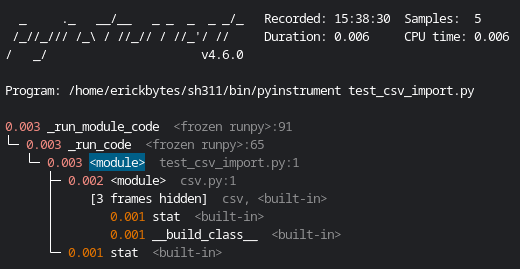

For Comparison, An import csv pyinstrument Report

I clocked the csv module import at 0.003 seconds, or three thousandths of a second, with pyinstrument. That's insanely fast compared to pandas. I chose to make a quick fix by shifting the import, but using the csv module could be a better long-term solution for speeding up your scripts.
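As a rough sketch of what that swap could look like, the function below sorts the rows and writes them with `csv.writer`. The column names come from the simplified example earlier in this post. Sorting by ticker symbol is an assumption, since the simplified example doesn't show how the real script sorts, and `write_prices_csv` is a hypothetical name.

```python
import csv
import os
import tempfile

COLS = ["Stock", "Price_Summary", "URL", "AH_%_Change"]


def write_prices_csv(prices, path):
    """Write rows of [stock, summary, url, ah_pct_change] to a CSV file."""
    # Assumption: sort alphabetically by ticker (first item in each row).
    rows = sorted(prices, key=lambda row: row[0])
    # newline="" is what the csv docs recommend for files passed to csv.writer.
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(COLS)
        writer.writerows(rows)
    return len(rows)


if __name__ == "__main__":
    # Made-up rows and example.com URLs, for illustration only.
    prices = [
        ["MSFT", "Stonk went up.", "https://example.com/MSFT", "1000%"],
        ["GOOG", "Stonk went up.", "https://example.com/GOOG", "1000%"],
    ]
    path = os.path.join(tempfile.mkdtemp(), "your_csv.csv")
    print(write_prices_csv(prices, path), "rows written to", path)
```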

Supplementary Reading

Making a Yahoo Stock Price CLI With Python

The Python Profilers, Python Documentation